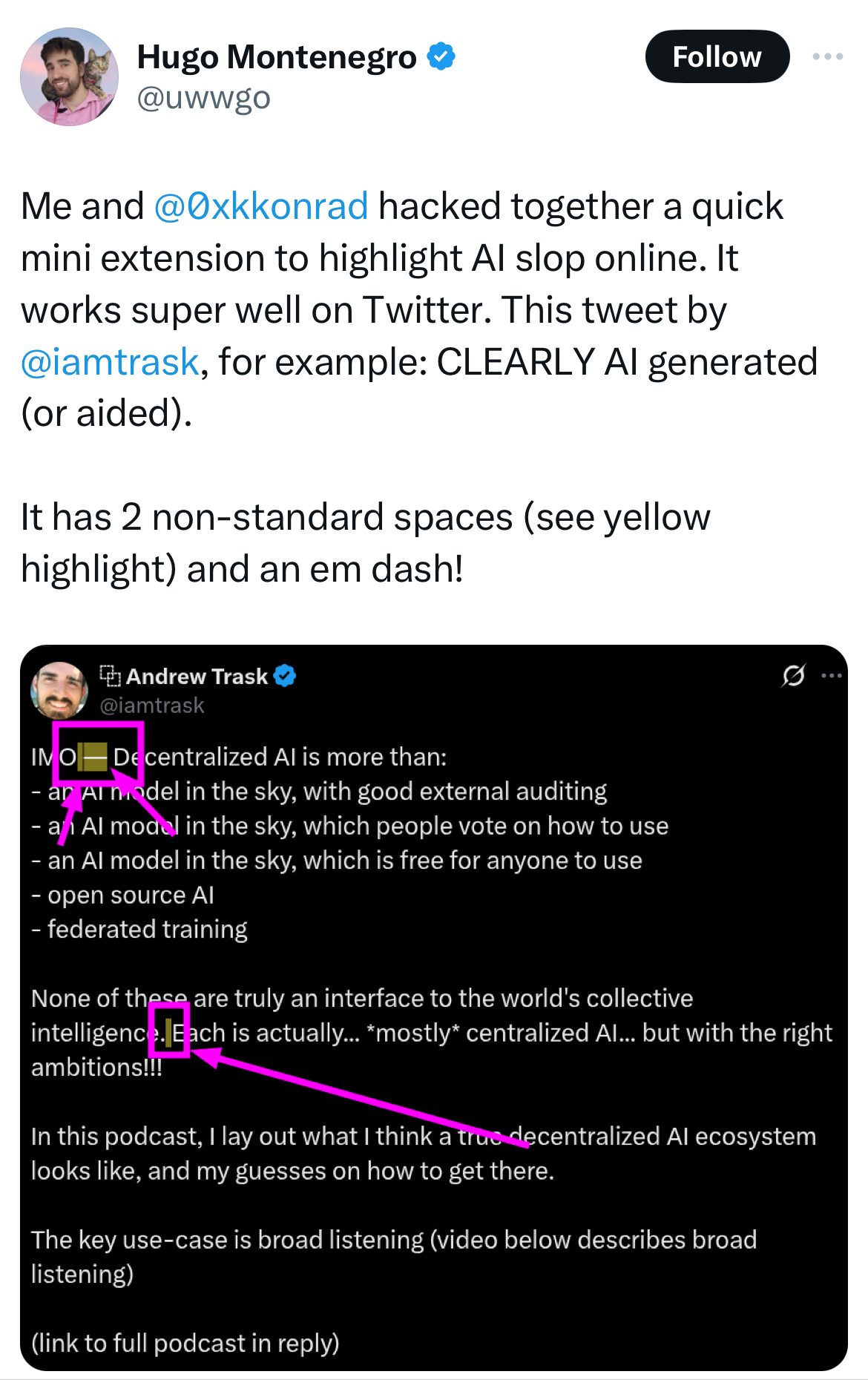

Yesterday, this post on social media triggered an intense debate among AI enthusiasts or, dare I say, experts. Most of the people I follow on that platform vocally pushed back against the claim that unusual Unicode characters give away that an LLM produced a given text.

I don't believe that using LLMs to draft text is bad, and I'm not particularly comfortable with the witch hunt around AI usage. I use LLM assistants extensively: almost anything I share - text or code - goes through an LLM before I publish it.

My usage has changed over the years because, in hindsight, I sometimes felt that my past work had been too heavily influenced by a model. It just didn't age well. In early 2025, I looked back at my work from May to October 2024 and mostly hated it. In my case the culprit was Claude Sonnet, but I believe it would have been the same with an OpenAI model, a Gemini model, or basically any model. The fact is that there are patterns you cannot unsee once your brain has associated them with AI slop(1). And, truth be told, it hurts when you spot these patterns in your own work.

For text, I find that my work ages better when I only use LLMs to identify typos, challenge potential logical flaws in my reasoning, and suggest improvements, in particular more idiomatic phrasing, as I'm not a native English speaker. For code, it depends on the project, but I prefer asking for a review of my own work rather than for a first stab. I still like being able to run something past a smart assistant. If a smart human could give me feedback and teach me things across all my areas of interest, were always available, and charged basically nothing for the service, I would happily use them. But realistically, LLMs are the only option.

Patterns in AI-Generated Content

The patterns mentioned above include, but are not limited to, unusual Unicode characters. That's why I believe the claim made in the social media post has merit, although the reality is certainly more nuanced than it appears at first glance. From using LLMs to generate text and relying on LLM responses for research, I have picked up the habit of looking for certain characters. And when I use LLMs to help with my writing, I often clean up the output to remove the unusual ones.

For example, it is undeniable that em dashes without surrounding spaces are an artifact of AI-generated content. In my experience, this pattern is so consistent that arguing otherwise is a tough position to defend. Before LLMs, I used dashes the way AI uses em dashes today, so only the character itself bothers me. I replace em dashes with " - " (space-dash-space), which still fits my style. When reviewing other people's work, I have sometimes questioned authors who used em dashes the way I use dashes (always with spaces around them), even though I was positive they hadn't used an LLM. I'm sure they didn't knowingly type a special character; it must have come from their text editor or note-taking app. These tools frequently introduce such artifacts, just as they swap apostrophes and quotation marks for typographic variants (Apple Notes can drive you crazy for that reason).

The post also mentioned special space characters, and these are also something I typically clean up when I copy a piece of LLM-generated text. Again, their presence does not guarantee that the text was AI-generated, because text editors and note-taking apps can swap characters. But I had noticed these characters in LLM outputs in the past and picked up the habit of running a short script to remove them. My assumption was that they were a sort of watermark used by frontier labs that do not allow training on their models' outputs. But in the debate that followed the social media post, someone whose opinion I value was adamant that the claim about unusual space characters was baseless. I realized that I don't even check for special space characters anymore, since a script handles them at the same time as the em dashes. So I was curious to see (1) whether the data supported my prior opinion on the matter and (2) whether the frequency of special space characters in LLM outputs had changed since last year.
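The cleanup script itself isn't shown here, so the following is only a minimal Python sketch of that kind of cleanup, not the actual script. It assumes the characters worth cleaning are the eight space characters tracked in the table, and it replaces them with an ordinary space rather than deleting them, so that words don't run together. Em dashes, with or without surrounding spaces, become " - ". The names `clean_llm_text`, `UNUSUAL_SPACES` and `SPACE_TABLE` are hypothetical.

```python
import re

# Hypothetical set: the eight space characters tracked in the table.
UNUSUAL_SPACES = "\u2003\u202f\u00a0\u2002\u2009\u3000\u200a\u2005"
# Maps each unusual space to a plain ASCII space (U+0020).
SPACE_TABLE = str.maketrans(UNUSUAL_SPACES, " " * len(UNUSUAL_SPACES))
# An em dash (U+2014) with any surrounding spaces or tabs, but not newlines.
EM_DASH = re.compile(r"[ \t]*\u2014[ \t]*")


def clean_llm_text(text: str) -> str:
    """Normalize unusual spaces to ' ' and em dashes to ' - ' (space-dash-space)."""
    # Translate first so that unusual spaces around an em dash are also collapsed.
    text = text.translate(SPACE_TABLE)
    return EM_DASH.sub(" - ", text)


print(clean_llm_text("Tokens\u202fmatter\u2014a lot."))  # prints: Tokens matter - a lot.
```

Translating the spaces before handling the dashes means an em dash surrounded by, say, narrow no-break spaces still ends up as a single " - ".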

Using the Speechmap data

To answer these questions, I turned to the Speechmap dataset, which I've used a lot recently. For this analysis, I used the version of the dataset I had saved locally, which included over 330k LLM responses from nearly 160 models. Of these responses, about 225k were neither refusals nor errors, so I focused on this subset, which is more representative of the content an LLM is meant to produce. The table below presents the results, sorted by overall frequency of unusual space characters.
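The analysis code isn't included in the post, and the dataset's schema isn't described, so this is only a sketch of how per-model figures like those in the table could be computed. It assumes responses arrive as (model, text) pairs already filtered to exclude refusals and errors, and it assumes the table's ratio column means occurrences of unusual spaces divided by occurrences of the standard space (U+0020), as a percentage; the per-character columns in the table do add up to that total, which is consistent with this reading. `space_ratios` and `TRACKED` are hypothetical names.

```python
from collections import Counter, defaultdict
from collections.abc import Iterable

# Code-point labels used as column names in the table, mapped to the characters.
TRACKED = {
    "U+2003": "\u2003",  # EM SPACE
    "U+202F": "\u202f",  # NARROW NO-BREAK SPACE
    "U+00A0": "\u00a0",  # NO-BREAK SPACE
    "U+2002": "\u2002",  # EN SPACE
    "U+2009": "\u2009",  # THIN SPACE
    "U+3000": "\u3000",  # IDEOGRAPHIC SPACE
    "U+200A": "\u200a",  # HAIR SPACE
    "U+2005": "\u2005",  # FOUR-PER-EM SPACE
}


def space_ratios(responses: Iterable[tuple[str, str]]) -> dict[str, dict[str, float]]:
    """Per model: count of each tracked character as a percentage of the count
    of standard spaces (U+0020), plus a 'total' across all tracked characters.

    Assumes `responses` holds (model, text) pairs already filtered to exclude
    refusals and errors.
    """
    counts: dict[str, Counter] = defaultdict(Counter)
    for model, text in responses:
        tally = counts[model]
        tally["std"] += text.count(" ")
        for label, char in TRACKED.items():
            tally[label] += text.count(char)

    ratios = {}
    for model, tally in counts.items():
        std = max(tally["std"], 1)  # guard against division by zero
        row = {label: 100 * tally[label] / std for label in TRACKED}
        row["total"] = sum(row.values())
        ratios[model] = row
    return ratios


sample = [("model-a", "one two\u00a0three"), ("model-b", "plain text here")]
# Sort models by total ratio, highest first, as in the table.
for model, row in sorted(space_ratios(sample).items(), key=lambda kv: -kv[1]["total"]):
    print(f"{model}: {row['total']:.3f}%")
```

Counting per model and dividing only at the end weights each model by its total volume of text, rather than averaging per-response ratios, which would let very short responses dominate.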

Some of my immediate takeaways were:

- That OpenAI tops the list did not really come as a surprise: their models were the reason I hypothesized that these characters could serve as watermarks. However, although I seemed to remember removing such characters from Sonnet and Gemini outputs, I couldn't find any evidence of abnormal use of these characters by Anthropic or Google models.

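- You can tell which OpenAI model produced the data used to train Microsoft's Phi-4 Reasoning Plus: its profile, dominated by em spaces (U+2003) with a few en spaces (U+2002), closely matches o3-mini's... and that's the point of a watermark.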

- Llama 3.1, 3.2 and 3.3 models consistently use no-break spaces (U+00A0) and almost no other unusual space, which is a distinctive signature. Intuitively, I would have expected that, beyond some prevalence threshold, these characters would be reflected in the tokenizer, but I have never seen anyone mention this. So perhaps the threshold is higher.

- Interestingly, the prevalence of unusual space characters in the outputs of OpenAI's GPT-4.5 and GPT-5 is virtually zero. So, except in OpenAI's open-weight (gpt-oss) models, unusual space characters look like they are becoming a thing of the past.

| Model | Unusual/standard space ratio | U+2003 (EM SPACE) | U+202F (NARROW NO-BREAK SPACE) | U+00A0 (NO-BREAK SPACE) | U+2002 (EN SPACE) | U+2009 (THIN SPACE) | U+3000 (IDEOGRAPHIC SPACE) | U+200A (HAIR SPACE) | U+2005 (FOUR-PER-EM SPACE) |
|---|---|---|---|---|---|---|---|---|---|
| openai/o3-mini | 0.597% | 0.593% | 0.000% | 0.000% | 0.004% | 0.000% | 0.000% | 0.000% | 0.000% |
| microsoft/phi-4-reasoning-plus | 0.566% | 0.541% | 0.000% | 0.001% | 0.024% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-oss-120b | 0.446% | 0.000% | 0.443% | 0.003% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-oss-20b | 0.191% | 0.000% | 0.176% | 0.014% | 0.000% | 0.001% | 0.000% | 0.000% | 0.000% |
| openai/o3-2025-04-16 | 0.183% | 0.000% | 0.000% | 0.181% | 0.001% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/o4-mini-2025-04-16 | 0.073% | 0.009% | 0.000% | 0.053% | 0.003% | 0.009% | 0.000% | 0.000% | 0.000% |
| openai/chatgpt-4o-latest | 0.016% | 0.007% | 0.008% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| microsoft/phi-4-reasoning | 0.015% | 0.011% | 0.003% | 0.001% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/chatgpt-4o-latest-20250428 | 0.012% | 0.000% | 0.006% | 0.000% | 0.006% | 0.000% | 0.000% | 0.000% | 0.000% |
| meta-llama/llama-3.3-8b-instruct | 0.011% | 0.000% | 0.000% | 0.011% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| meta-llama/llama-3.1-70b-instruct | 0.011% | 0.000% | 0.000% | 0.011% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| meta-llama/llama-3.1-8b-instruct | 0.008% | 0.000% | 0.000% | 0.007% | 0.000% | 0.001% | 0.000% | 0.000% | 0.000% |
| meta-llama/llama-3.1-405b-instruct | 0.006% | 0.000% | 0.000% | 0.006% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| moonshotai/kimi-k2-instruct | 0.005% | 0.002% | 0.000% | 0.000% | 0.002% | 0.001% | 0.000% | 0.000% | 0.000% |
| meta-llama/llama-3.2-11b-vision-instruct | 0.004% | 0.000% | 0.000% | 0.002% | 0.000% | 0.000% | 0.001% | 0.000% | 0.000% |
| nousresearch/hermes-4-405b | 0.002% | 0.002% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| qwen/qwen-2.5-7b-instruct | 0.002% | 0.000% | 0.002% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/o1 | 0.002% | 0.000% | 0.001% | 0.000% | 0.001% | 0.000% | 0.000% | 0.000% | 0.000% |
| moonshotai/kimi-vl-a3b-thinking | 0.001% | 0.000% | 0.000% | 0.001% | 0.000% | 0.000% | 0.001% | 0.000% | 0.000% |
| meta-llama/llama-3.2-90b-vision-instruct | 0.001% | 0.000% | 0.000% | 0.001% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| qwen/qwen2.5-vl-72b-instruct | 0.001% | 0.000% | 0.000% | 0.001% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| microsoft/phi-4-multimodal-instruct | 0.001% | 0.001% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| qwen/qwq-32b | 0.001% | 0.000% | 0.000% | 0.001% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| meta-llama/llama-3.3-70b-instruct | 0.001% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| microsoft/phi-3-medium-128k-instruct | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| nousresearch/hermes-4-70b-thinking | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| tngtech/DeepSeek-TNG-R1T2-Chimera | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openrouter/optimus-alpha | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| deepseek/deepseek-r1-0528 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| meta-llama/llama-4-maverick | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openrouter/quasar-alpha | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| qwen/qwen3-235b-a22b | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| qwen/qwen-2.5-72b-instruct | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| nousresearch/hermes-4-70b | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/magistral-medium-2506 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| meta-llama/llama-3-8b-instruct | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-4.1-2025-04-14 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| microsoft/phi-4 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| thudm/glm-4-z1-32b-0414 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mistral-small-3.2 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-1.5-pro-002 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-1.5-flash-002 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| qwen/qwen-max | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| thudm/glm-4-32b-0414 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-1.5-flash-8b-001 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-4-1106-preview | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-4-0314 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mistral-small-2503 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-5-chat-2025-08-07 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| perplexity/r1-1776 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| qwen/qwen3-14b | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemma-3-4b-it | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| nvidia/llama-3_3-nemotron-super-49b-v1 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-5-2025-08-07 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| tngtech/deepseek-r1t-chimera | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| x-ai/grok-2-1212 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemma-3n-e4b-it | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| meta-llama/llama-4-scout | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-3-opus-20240229 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-5-nano-2025-08-07 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-4.5-preview | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/o1-mini-2024-09-12 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openrouter/horizon-beta | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| qwen/qwen3-30b-a3b | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| qwen/qwen3-32b | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-opus-4 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-2.0-flash-001 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| deepseek/deepseek-r1 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/o1-preview | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-4o-2024-11-20 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/magistral-small-2506 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| nvidia/llama-3_1-nemotron-ultra-253b-v1 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-pro-1.5 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| x-ai/grok-3-beta | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| x-ai/grok-3-mini-beta | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-sonnet-4-thinking | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| deepseek/deepseek-chat | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-3-haiku-20240307 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-3.7-sonnet | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| microsoft/mai-ds-r1-fp8 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-4-turbo | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-opus-4-thinking | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| rekaai/reka-flash-3 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mistral-large-2411 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| nvidia/llama-3_1-nemotron-nano-8b-v1 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-3.5-sonnet | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-2.5-pro-preview-06-05 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| amazon/nova-micro-v1.0 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| amazon/nova-pro-v1.0 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mistral-saba-2502 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/ministral-8b-2410 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/magistral-medium-2506-thinking | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| qwen/qwen3-235b-a22b-thinking-2507 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-3-7-sonnet-20250219-thinking | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| meta-llama/llama-3-70b-instruct | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemma-2-27b-it | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-3-5-sonnet-20240620 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-1.0-pro-002 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| deepseek/deepseek-chat-v3-0324 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-4.1-nano-2025-04-14 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mixtral-8x22b-instruct-v0.1 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| microsoft/phi-3.5-mini-instruct | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-4-turbo-preview | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| deepseek/deepseek-r1-zero | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-4-0613 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| deepseek/deepseek-v3.1 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-4o-2024-08-06 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-2.5-flash-preview-05-20 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| microsoft/phi-3-mini-128k-instruct | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mistral-small-2409 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-4o-mini-2024-07-18 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemma-3-12b-it | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemma-3-27b-it | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-2.5-flash-preview-04-17-thinking | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-opus-4.1 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mistral-7b-instruct-v0.2 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mistral-large-2407 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-2.0-flash-lite-001 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-2.5-flash-lite | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mixtral-8x7b-v0.1 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-2.5-flash-lite-preview-06-17 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-1.5-flash-001 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mistral-medium-2312 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| z-ai/glm-4.5 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mistral-small-2501 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-3.5-turbo-1106 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-3-5-haiku-20241022 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| minimax/minimax-m1-40k | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-2.5-pro-preview-03-25 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemma-2-9b-it | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-4.1-mini-2025-04-14 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mistral-medium-3.1-2508 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-2.5-flash-preview-04-17 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-2.5-pro-preview-05-06 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| z-ai/glm-4.5-air | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mistral-nemo-2407 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| deepseek/deepseek-v3.1-thinking | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| x-ai/grok-4-07-09 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| nousresearch/hermes-4-405b-thinking | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-3-7-sonnet-20250219 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| nvidia/Llama-3_3-Nemotron-Super-49B-v1_5 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/o1-preview-2024-09-12 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mistral-7b-instruct-v0.1 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| baidu/ernie-4.5-300b-a47b | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mistral-medium-3-2505 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| mistralai/mistral-7b-instruct-v0.3 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-sonnet-4 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-3-5-sonnet-20241022 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-2.5-flash-preview-05-20-thinking | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| anthropic/claude-3-sonnet-20240229 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-3.5-turbo-0125 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| amazon/nova-lite-v1.0 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-4o-2024-05-13 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| qwen/qwen3-235b-a22b-2507 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-3.5-turbo-0613 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| x-ai/grok-beta | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| google/gemini-1.5-pro-001 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
| openai/gpt-5-mini-2025-08-07 | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% | 0.000% |
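To make the Phi-4 Reasoning Plus observation more concrete, one could treat each model's per-character percentages as a vector and compare profiles, for instance with cosine similarity. The sketch below does this with values copied from the table for a few OpenAI models. Cosine similarity, the `PROFILES` dictionary and the function names are illustrative assumptions, not part of the analysis above, and a high similarity is suggestive rather than proof of which model generated a training set.

```python
import math

# Per-character percentages copied from the table, in column order:
# U+2003, U+202F, U+00A0, U+2002, U+2009, U+3000, U+200A, U+2005
PROFILES = {
    "openai/o3-mini": [0.593, 0.000, 0.000, 0.004, 0.000, 0.000, 0.000, 0.000],
    "openai/gpt-oss-120b": [0.000, 0.443, 0.003, 0.000, 0.000, 0.000, 0.000, 0.000],
    "openai/o3-2025-04-16": [0.000, 0.000, 0.181, 0.001, 0.000, 0.000, 0.000, 0.000],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means the same mix of characters, 0.0 means no overlap."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def closest_profile(target: list[float], profiles: dict[str, list[float]]) -> str:
    """Name of the reference profile most similar to `target`."""
    return max(profiles, key=lambda name: cosine(target, profiles[name]))


# microsoft/phi-4-reasoning-plus, also copied from the table.
phi_plus = [0.541, 0.000, 0.001, 0.024, 0.000, 0.000, 0.000, 0.000]
print(closest_profile(phi_plus, PROFILES))  # prints: openai/o3-mini
```

Cosine similarity ignores overall magnitude, so it compares which characters a model favors rather than how often it uses them; that fits the watermark question better than comparing raw rates.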

In conclusion, I still believe that some patterns, including the use of unusual Unicode characters, point to AI writing assistance. Taken in isolation, they are not sufficient to draw conclusions, but when several of them are present, they can provide strong evidence. However, these patterns are bound to evolve, as OpenAI's models show.

Regardless, I consider AI involvement acceptable. In my opinion, those who reject it on principle are missing out on valuable assistance. As my example illustrates, finding the right balance takes trial and error, so the earlier you start toying with these tools, the sooner you learn the lessons that help you avoid AI slop.