Retrieval And Classification including LATE interaction on Apple Silicon.

mlx-raclate is a versatile library built on Apple's MLX framework. It provides a unified interface to train and run classifiers and embedding models — including ModernBERT and Late Interaction (ColBERT-style) models — natively on macOS. Despite recent innovations on encoders or decoder-based classifiers / embedding models, the MLX ecosystem lacked tools to finetune such small pretrained models locally. Raclate is an attempt to address this niche in the market.

This project evolved from modernbert-mlx to support a wider range of architectures and tasks. It is currently feature-complete but in an early release stage; bugs may occur.

Key Features

- Apple Silicon Native: Fully optimized for M-series chips using MLX.

- Unified Pipeline: A single interface to load and run Masked LM, Text Classification, and Sentence Similarity tasks.

- Late Interaction Support: First-class support for MaxSim (ColBERT-style) retrieval, particularly with LFM2 and ModernBERT architectures.

- Full Fine-Tuning: Specialized trainer for fine-tuning small-to-mid-sized models (ModernBERT, Qwen2.5/3, LFM2, Gemma) on local hardware.

Installation

Install via uv or pip:

uv add mlx-raclate

# or

pip install mlx-raclateFrom source:

git clone https://github.com/pappitti/mlx-raclate.git

cd mlx-raclate

uv syncSupported Architectures

mlx-raclate supports architectures specifically useful for efficient local retrieval and classification:

- ModernBERT: (e.g.,

answerdotai/ModernBERT-base) - LFM2: Liquid Foundation Models (e.g.,

LiquidAI/LFM2-350M,LiquidAI/LFM2-ColBERT-350M) - Qwen3 Embedding: (e.g.,

Qwen/Qwen3-Embedding-0.6B) - Gemma3 Embedding: (e.g.,

google/embeddinggemma-300m) - T5Gemma Encoder: Stripping out the decoder part of T5Gemma models (e.g.,

google/t5gemma-2b-2b-ul2)

More architectures can easily be added ; the library doesn’t rely on pre-conversion to mlx. Any model with safetensors can be loaded and the loader automatically uses the precision defined in the config file (bfloat16, fp16, fp32… defaulting to fp32)

Inference: Overview

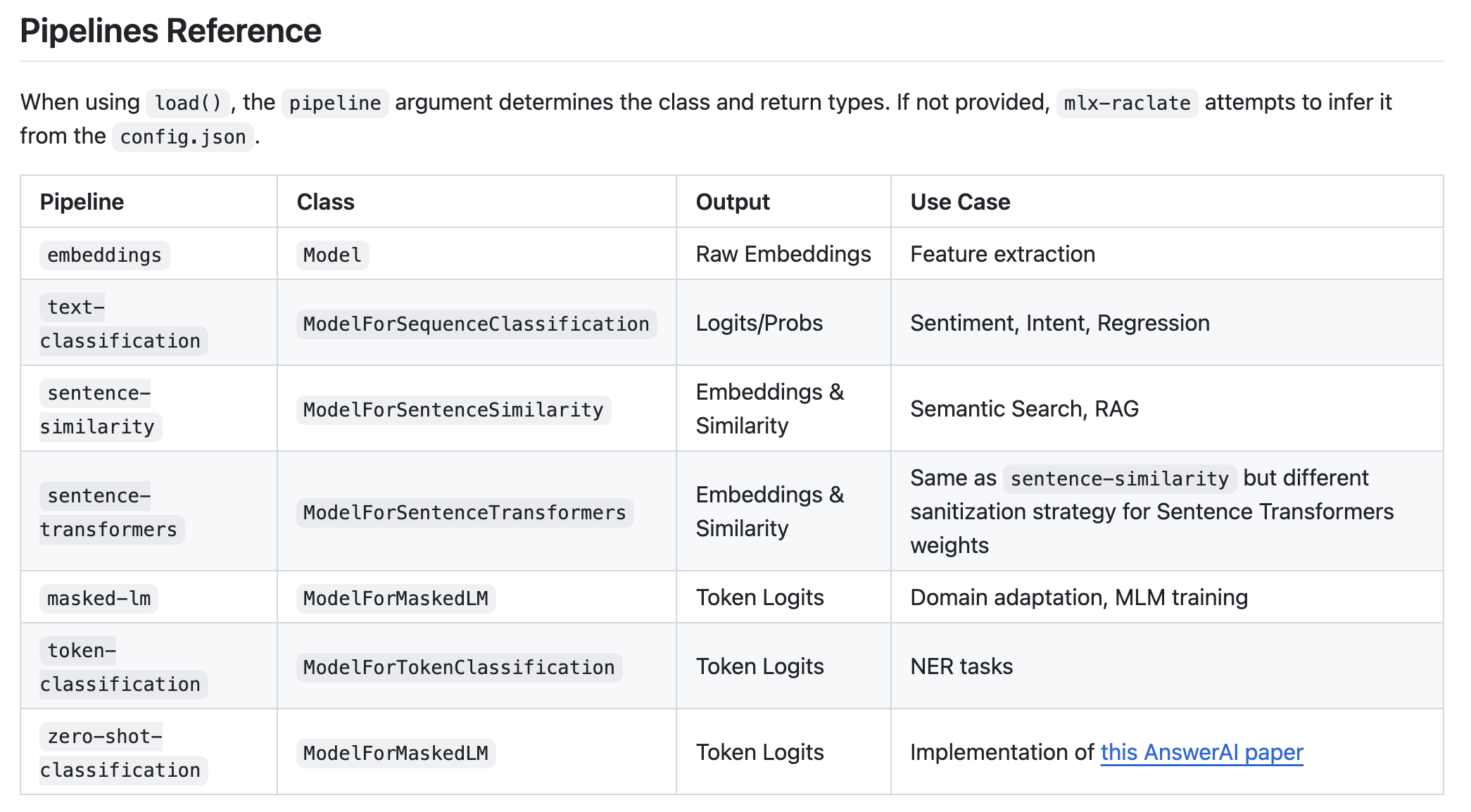

The library uses a pipeline concept similar to Hugging Face Transformers. You can specify a pipeline manually, or let the loader infer it from the model configuration. If no pipeline is found, the Model class is loaded, which returns normalized embeddings.

Detailed code for each pipeline is available in the test directory of the Github repository. See tests/inference_examples.

Pipelines are not only *inspired* by Transformers : the idea was to replicate the Transformers pipeline architectures for each model family (to the extent Transformers has a pipeline for a model family) so the models can be used with Transformers. For example, this Qwen-based wine classifier was trained with Raclate (in bfloat16) and natively compatible with Transformers.

Server

mlx-raclate includes a FastAPI server for classifier inference. See mlx_raclate.utils.server.

Training (Tuner)

mlx-raclate includes a robust training engine specifically designed for fine-tuning these architectures on Apple Silicon.

- Full Fine-tuning (LoRA is not currently supported/needed for these model sizes).

- Tasks: Text Classification, Sentence Similarity (Bi-Encoder & Late Interaction), and Masked LM. The trainer supports standard dense retrieval, classification, and masked language modeling, as well as Late Interaction training patterns. It automatically switches between MNRL (Multiple Negatives Ranking Loss) for triplets/pairs or MSE/Cosine Loss for scored pairs

- Efficiency: Gradient Accumulation, Gradient Checkpointing, and Smart Collation.

For detailed training documentation, supported datasets, and CLI usage, please see TUNER.md.

Next Steps

- More architectures

- Toying with encoder-based diffusion language models

- Revamping trainer to handle differently classification heads and base models weights

Acknowledgements

- MLX team for the framework.

- Transformers for the configuration standards.

- MLX-Embeddings for inspiration on broader embeddings architectures. MLX-Raclate focuses on longer-context models but you should definitely look there for BERT, XLM_RoBERTa and image embeddings.

- PyLate for inspiration on Late Interaction mechanics.